[MATH] The Optimal Time to Harvest Crops in Minecraft

| GitHub Repo 👾 | YouTube Video 📺 | Scope: ⭐⭐⭐ |

A Minecraft Optimization Problem

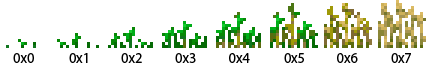

Harvesting crops in Minecraft is a fundamental mechanic in the game. Crops progress through a series of growth stages, until a last stage is reached. When a crop is harvested at this last stage, it will yield an item. Harvesting a crop before its last stage yields no items.

Let’s call the number of growth stages $n$. For example, wheat has $n=8$, as it has $8$ growth stages.

For a specific crop’s number of growth stages, refer to the Minecraft Wiki.

Most players wait until a large majority of their crops are ready before harvesting. But if we were to build a machine that waits some specific time before harvesting, what is the best time for the machine to wait? Clearly the value is dependent on $n$, but what are the specific values?

This periodic farming strategy may seem antiquated in the Minecraft versions that have observers (blocks that can detect a random tick of a specific block), but this is actually not the case. Ilmango, a well-known Minecraft automated farm designer, points out in this YouTube video that Amethyst Clusters are a good candidate for this type of farm. So this question is important for many Minecraft versions, new and old. But to answer it we first need to examine crop growth itself.

How Do Minecraft Crops Grow?

Every 0.05 seconds, Minecraft updates its game state. This update is called a tick. Every tick, the following procedure runs for every subchunk loaded by a player:

- Randomly select $m$ blocks within the subchunk (with replacement)

- For each block that is selected, do the associated random tick event

For this process, the chosen blocks are said to be random ticked, and the value $m$ is (confusingly) called the tick speed. Note that Minecraft has $m$ set to $3$.

Most blocks in the game do nothing when random ticked, but certain blocks do special behaviors. Importantly for us, crop blocks advance their growth stage when random ticked. We can now mathematically write down our optimization question.

Formalizing the Problem

The number of times a block is random ticked after $t$ ticks follows a Binomial distribution $X_{mt,p} \sim B(mt,p)$, where $p=2^{-12}$ is the probability that a specific block is chosen in a subchunk.

Then our problem is the same as calculating the following function.

\[\Lambda_{m,p}(n) = \max_{t} \frac{1}{t}\mathbb{P}(X_{mt,p} \geq n) = \max_{t} \frac{1}{t}\left(1-(1-p)^{mt-n+1}\sum_{k=0}^{n-1}p^k \binom{mt}{k}\right).\]Note that for Minecraft we are specifically interested in $\Lambda_{3,2^{-12}}(n)$.

A Useful Approximation

Due to $p$ being small, we can approximate $X_{mt,p}$ with a Poisson random variable $Y \sim \text{Poisson}(t)$. We then define the corresponding function for $Y$, namely

\[\lambda(n) = \max_t \frac{1}{t}\mathbb{P}(Y \geq n) = \max_t \frac{1}{t}\left(1-e^{-t}\sum_{k=0}^{n-1}\frac{t^k}{k!}\right)\]We then have, for large $n$ and small $p$, that

\[\Lambda_{m,p}(n) \approx \frac{1}{mp}\lambda(n).\]Direct Computation

We want to simply our expressions for $\Lambda_{m,p}(n)$ and $\lambda(n)$ by getting rid of the $max$ in both expressions. To do this, we take the derivative with respect to $t$ and set the expression to $0$. For $\Lambda_{m,p}(n)$ this gives

\[\begin{eqnarray*} 0 & = & (1-p)^{n-1}e^{-m\ln(1-p)t} + \frac{(p)^{n-1}}{(n-1)!}\ln(1-p)(mt)^n -1 \\ & + & \sum_{k=1}^{n-1}\left(\frac{\ln(1-p)p^{k-1}}{(k-1)!} + \sum_{i=k}^{n-1}((k-1)s(i,k)+\ln(1-p)s(i,k-1))\frac{p^i}{i!}\right)(mt)^k, \end{eqnarray*}\]The above equation is critical, as it says that for a given $n$, the optimal time to wait is the $t>0$ so that the above expression is true.

Similarly for $\lambda(n)$, we have

\[0 = e^t - \frac{t^n}{(n-1)!} - \sum_{k=0}^{n-1}\frac{t^k}{k!}.\]Numerical Results

Both of these equations can easily be solved numerically using computers. Running root finding algorithms for small $n$ gives the following table

| $n$ | $\lambda(n)$ | $(2^{12}/3)\lambda(n)$ | $\Lambda_{3,2^{-12}}(n)$ | Opimal Tick |

| 1 | 0 | 0 | 0 | 1 |

| 2 | 1.7932821329 | 2448.4278721205 | 2449.1597630796 | 2449 |

| 3 | 3.3836342829 | 4619.7886741889 | 4620.6492653516 | 4621 |

| 4 | 4.8812774913 | 6664.5708681839 | 6665.5420888695 | 6666 |

| 5 | 6.3225055510 | 8632.3275790109 | 8633.3997579089 | 8633 |

| 6 | 7.7245836004 | 10546.6314757967 | 10547.7982629752 | 10548 |

| 7 | 9.0973444026 | 12420.9075576501 | 12422.1643509140 | 12422 |

| 8 | 10.4470306813 | 14263.6792235702 | 14265.0224812591 | 14265 |

| 9 | 11.7779066065 | 16080.7684866791 | 16082.1953698680 | 16082 |

| 10 | 13.0930424983 | 17876.3673576602 | 17877.8755221598 | 17878 |

| 11 | 14.3947389019 | 19653.6168474178 | 19655.2043132205 | 19655 |

| 12 | 15.6847741726 | 21414.9450036956 | 21416.6100682348 | 21417 |

| 13 | 16.9645579170 | 23162.2764093484 | 23164.0175872686 | 23164 |

| 14 | 18.2352307260 | 24897.1683511953 | 24898.9843310297 | 24899 |

| 15 | 19.4977315702 | 26620.9028372304 | 26622.7924493235 | 26623 |

| 16 | 20.7528448428 | 28334.5508254074 | 28336.5129688193 | 28337 |

Notice how close the exact and approximate solutions are! They often only disagree by a single tick. The final column lists the best tick to harvest a crop of a specific growth stage.

Let’s repeat the calculations done in IlMango’s video for amethyst clusters. Amethyst clusters are an $n=4$ crop, that grow on the sides of blocks (ie. there is a $1$ in $6$ chance that a specific cluster grows). There is an additional rule where if the block is random ticked there is only a $1$ in $5$ chance that the growth stage will advance. This results in an optimal time of

\[\Lambda_{3,2^{-12}}(4) * 6 * 5 = 199966 \text{ ticks} \approx 167 \text{ minutes}\]which is what IlMango arrives at in his video.

The above table should be sufficient for any periodic Minecraft farms at the time of writing, as all crops in the game have a small number of growth stages. But what happens if there is a crop introduced to the game that has a much larger growth rate?

An Academic Exercise

The computation of $\Lambda_{m,p}(n)$ and $\lambda(n)$ for large $n$ can be difficult even for modern root finding algorithms. However, one can show that the leading terms of $\lambda(n)$ are as follows.

Theorem 1. The leading terms of $\lambda(n)$ are

\[\lambda(n) = n + \sqrt{n\ln\left(\frac{n}{2\pi}\right)} + \dots\]This gives an asymptotic estimate for $\lambda(n)$, which is an estimate that decreases in percent error as $n$ increases.

Proof. We begin with a definition of $\lambda(n)$ that allows for continuous $n$, namely

\[1 = \int_0^1 e^{(n\ln{(1-u)}+\lambda(n)u)} du.\]We can use Laplace’s method to derive leading terms of $\lambda(n)$.

\[1 = \int_0^1 e^{(n\ln{(t)}+\lambda(n)(1-t))} dt = \int_0^1 e^{nf(n,t)}dt\]there $f(n,t) = \ln{(t)}+\frac{\lambda(n)}{n}(1-t)$. We start by writing a taylor explansion for $f(n,t)$.

\[f(n,t) = \ln \left(\frac{n}{\lambda(n)}\right) + \frac{\lambda(n)}{n} -1 + \sum_{k \geq 2}\left(\frac{\lambda(n)}{n}\right)^k \frac{(-1)^{k-1}}{k}\left(t-\frac{n}{\lambda(n)}\right)^k\]The above equation is valid for $t \in (\frac{n}{\lambda(n)}-1, \frac{n}{\lambda(n)}+1)$. As $\frac{n}{\lambda(n)} > 0$, we have $(0,1) \subset (\frac{n}{\lambda(n)}-1, \frac{n}{\lambda(n)}+1)$ and can integrate over the region $(0,1)$. We can then write

\[\int_0^1 \exp{(nf(n,t))} dt = \left(\frac{n}{\lambda(n)}\right)^n e^{\lambda(n)}e^{-n}\int_0^1\exp{\left(n\sum_{k\geq2}\frac{(-1)^{k-1}}{k}\left(\frac{\lambda(n)}{n}t-1\right)^k\right)}dt\]Let us call the last integral

\[I(n,\lambda(n)) = \lim_{n\to\infty} I_m(n,\lambda(n)) = \lim_{n\to\infty} \int_0^1\exp{\left(n\sum_{k=2}^m\frac{(-1)^{k-1}}{k}\left(\frac{\lambda(n)}{n}t-1\right)^k\right)}dt\]Following Laplace’s method, we approximate $I$ with $I_2$. We can then say that, for $n \to \infty$, that

\[\left(\frac{n}{\lambda(n)}\right)^ne^{\lambda(n)}e^{-n}I_2(n,\lambda(n)) \to 1\]or

\[n\ln(n) -n\ln(\lambda(n)) + \lambda(n)-n+\ln(I_2(n,\lambda(n))) \to 0.\]We then suppose that $\lambda(n) = n + n\delta_1(n)$. We can then say that $\delta_1(n) \to 0$ as $n \to \infty$.

\[-n\ln(1+\delta_1(n)) + n\delta_1(n) + \ln I_2(n,\lambda(n)) \to 0\]What can be said about $I_2$? We have

\[I_2(n,\lambda(n)) = \int_0^1\exp{\left(-\left(\frac{\lambda(n)}{\sqrt{2n}}t-\sqrt{\frac{n}{2}}\right)^2\right)}dt\]and written in terms of common functions

\[I_2(n,\lambda(n)) = \sqrt{\frac{\pi n}{2}}\frac{1}{\lambda(n)}\left(\text{erf}\left(\sqrt{\frac{n}{2}}\delta_1(n)\right) + \text{erf}\left({\sqrt{\frac{n}{2}}}\right)\right).\]Therefore, we have

\[-n\ln(1+\delta_1(n)) + n\delta_1(n) +\frac{1}{2}\ln\left(\frac{\pi}{2}\right) + \frac{1}{2}\ln(n) - \ln(\lambda(n)) + \ln(2) \to 0\]as \(\text{erf}\left(\sqrt{\frac{n}{2}}\delta_1(n)\right) + \text{erf}\left({\sqrt{\frac{n}{2}}}\right) \to 2\) quickly. We can then write

\[-n\ln(1+\delta_1(n)) + n\delta_1(n) - \frac{1}{2}\ln(n) -\ln(1+\delta_1(n)) + \frac{1}{2}\ln(2\pi) \to 0.\]We can again use the Taylor expansion of $\ln(1+x)$ to get the further approximate

\[\frac{n\delta_1^2(n)}{2} + \frac{1}{2}\ln\left(\frac{2\pi}{n}\right)-\delta_1(n)+\frac{\delta_1^2(n)}{2} \to 0.\]Finally, completing the square gives the following expression for $\delta_1(n)$

\[\delta_1(n) \approx \frac{1}{n+1}\left( 1 + \sqrt{1+(n+1)\ln\left(\frac{n}{2\pi}\right)}\right)\]or ignoring the $-\ln(1+\delta_1(n))$ term gives the cleaner but less accurate expression

\[\delta_1(n) \approx \sqrt{\frac{1}{n}\ln\left(\frac{n}{2\pi}\right)},\]which completes the proof. The above theorem and proof were completed with the help of my academic advisor Professor Iddo Ben-Ari.

On the opposite end of the spectrum, can any of the smaller values be written exactly?

Another Academic Exercise

Though slightly contrived, it turns out one can write $\lambda(2)$ and $\lambda(3)$ exactly using Lagrange Inversion. Using this technique along with certain identities for Partial Bell polynomials gives the following results.

\[\lambda(2) = \frac{e^2-3}{e^2-5} - \sum_{n \geq 1}f_n \left(\frac{e^2-7}{e^2-5} \right)^{n+1},\]where

\[f_n = \sum_{i=1}^{n}\sum_{j=0}^i\sum_{k=0}^j\frac{(-1)^{n+k}(n+i)!S(n-i+j+k,k)e^{2j}}{(n+1)!(i-j)!(j-k)!(n-i+j+k)!(e^2-5)^{i}}.\]Similarly,

\[\lambda(3) = \frac{3e^4-71}{e^4-29} - \sum_{n\geq1}g_n\left(\frac{e^4-45}{e^4-29}\right)^{n+1}\]where

\[g_n = \sum_{i=1}^n\sum_{j=0}^n\sum_{k=0}^i\sum_{\ell=0}^{k-i}\frac{(-1)^{n-\ell}(n+i)!13^{2k+j-n}S(j+\ell,\ell)e^{4(j-k)}}{(n+1)!(j+\ell)!(i-k-\ell)!k!2^k(e^4-29)^i}\binom{k}{n-j-k}.\]Note that above has $\binom{k}{n-j-k}=0$ if $k < n-j-k$ and $S(a,b)$ is the $(a,b)$-th Stirling Number of the second kind, which is defined as $S(a,b) = \frac{1}{b!}\sum_{k=0}^b(-1)^k \binom{b}{k}(b-k)^{a}$. Both sums converge slowly and so are of little use.

Further Questions

-

Are there other constants in the main table that can be written exactly?

-

The process for oxidation in copper blocks is different than other random tick events. What are the optimal times for harvesting a specific oxidation stage for copper?

Thank you for reading!